MCBTH Interactive Projection: Technical writeup

Guy Cassiers (Toneelhuis) directed MCBTH and Dominique Pauwels (LOD) composed the music. Hangaar made the video design and developed the software for the interactive projections. More information about the play is available on Toneelhuis.be.

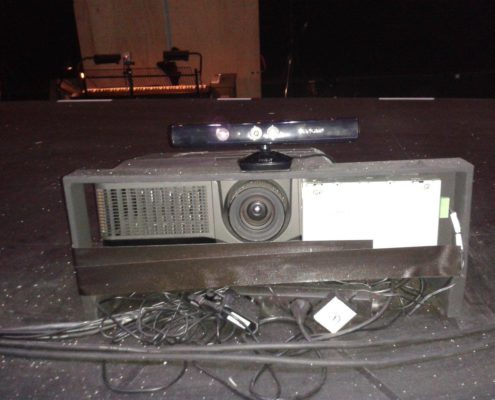

The visuals are interactive and based on the actors’ movements and positions. The main requirement was to project auras with artistic content on and around the actors, at depths between 1 and 8 meters from the Kinect. The first component required is the Kinect-projector calibration: based on the position of an actor, we need to know where to project. For this, we created an openFrameworks addon that calibrates a Kinect and a projector. It can be found at http://github.com/Kj1/ofxProjectorKinectCalibration. The code is an adaptation of Elliot Woods’ VVVV patch, using Kyle McDonald’s ofxCv addon. The following video shows the calibration process in action; it takes only a few minutes:
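To illustrate what a Kinect-projector calibration computes, here is a minimal, dependency-free sketch that fits a 3x4 projection matrix mapping Kinect-space points (in meters) to projector pixels with a plain direct linear transform (DLT) least-squares fit, and then uses it to map new points. This is not the addon's implementation (which builds on ofxCv); the names `fit_projection` and `project` are hypothetical. The sketch assumes at least six non-coplanar point correspondences, and it fixes `P[2][2]` to 1, which assumes the Kinect and the projector both roughly face the stage.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]  # Kinect space, meters
Point2 = Tuple[float, float]         # projector pixels
Matrix = List[List[float]]


def _solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve the square system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("degenerate correspondences (e.g. all points coplanar)")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_projection(world: Sequence[Point3], pixels: Sequence[Point2]) -> Matrix:
    """Least-squares DLT fit of a 3x4 matrix P mapping Kinect points to projector pixels.

    Assumption: P[2][2] is fixed to 1 (both devices face the stage), which
    leaves 11 unknowns; at least 6 non-coplanar correspondences are needed.
    """
    if len(world) < 6 or len(world) != len(pixels):
        raise ValueError("need at least 6 matching correspondences")
    # Scale pixels to roughly [-1, 1] so the normal equations stay well conditioned.
    s = 1.0 / (max(max(abs(u), abs(v)) for u, v in pixels) or 1.0)
    rows: List[List[float]] = []
    rhs: List[float] = []
    for (x, y, z), (u, v) in zip(world, pixels):
        u, v = u * s, v * s
        # u * (p31 x + p32 y + z + p34) = p11 x + p12 y + p13 z + p14, same for v.
        rows.append([x, y, z, 1, 0, 0, 0, 0, -u * x, -u * y, -u])
        rhs.append(u * z)
        rows.append([0, 0, 0, 0, x, y, z, 1, -v * x, -v * y, -v])
        rhs.append(v * z)
    # Normal equations (A^T A) p = A^T b; an SVD-based solver would be more robust.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(11)] for i in range(11)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(11)]
    p = _solve(ata, atb)
    # Undo the pixel scaling on the two image rows.
    return [[c / s for c in p[0:4]], [c / s for c in p[4:8]], [p[8], p[9], 1.0, p[10]]]


def project(p: Matrix, pt: Point3) -> Point2:
    """Map one Kinect-space point to projector pixel coordinates."""
    x, y, z = pt
    hu, hv, hw = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in p)
    return hu / hw, hv / hw
```

Once such a matrix is known, every contour point detected by the Kinect can be mapped into the projector image with `project`. Lens distortion is ignored in this sketch.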

MCBTH Kinect-projector calibration from Hangaar.net on Vimeo.

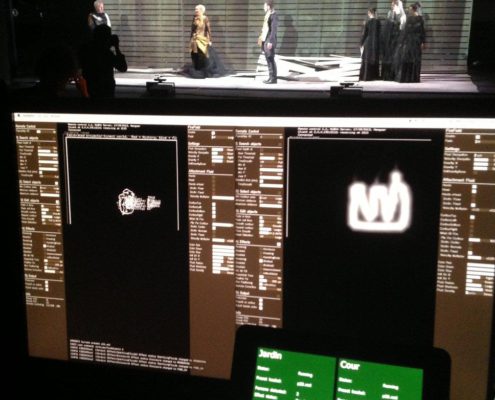

The stage is very wide and requires two non-overlapping Kinects. We created two “interactive spaces”: one for the Jardin (left) side and one for the Cour (right) side of the stage.

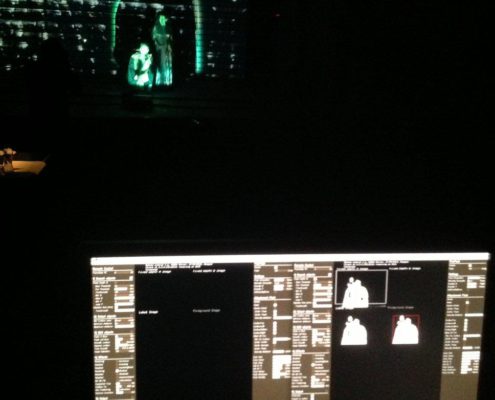

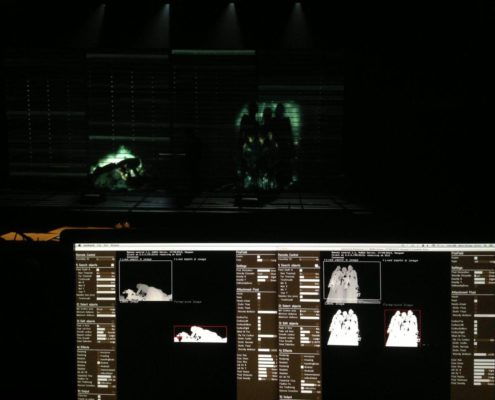

The Kinect input was first processed to fill in depth gaps and remove noise; raw Kinect data can be quite tricky, especially beyond 4 meters. Next, person detection was performed, using the Kinect’s person tracking for actors closer than 4 meters and adaptive background subtraction beyond 4 meters. The resulting contour (silhouette) was then mapped to projector space using the addon above, which yields a jittery, “squarish” line around the actor. This line was stabilized using simplification, smoothing and time averaging.

Finally, effects could be applied to this processed contour: creating an aura around the actor, projecting lines around them, adding fake blood on their hands, setting the actors on fire, and so on. The resulting output was used as a dynamic grayscale mask applied to custom-made video, producing a dynamic video overlay on the actors.

The actual content of the projections was created during the making of the play, in line with the directors’ artistic vision, while the area onto which the video is projected (the mask) is determined in real time by this system. This gave us more flexibility in adding or changing the actual “feel” of the projections.
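The gap-filling and denoising step can be illustrated with two simple filters. This is a hypothetical sketch, not the production code. It assumes depth frames in millimeters with 0 marking pixels without a reading. Each hole is filled with the median of its valid neighbors, repeated over a few passes so that larger holes fill from the edges inward. A 3x3 median filter is then applied against speckle noise. The names `fill_holes` and `median_3x3` are illustrative.

```python
from statistics import median
from typing import List

# Assumption: depth in millimeters, 0 = no reading (the usual Kinect convention).
Depth = List[List[int]]


def _valid_neighbours(d: Depth, y: int, x: int) -> List[int]:
    """Non-zero values in the 3x3 window around (y, x), clipped at the borders."""
    h, w = len(d), len(d[0])
    return [d[j][i]
            for j in range(max(0, y - 1), min(h, y + 2))
            for i in range(max(0, x - 1), min(w, x + 2))
            if d[j][i] != 0]


def fill_holes(depth: Depth, max_passes: int = 8) -> Depth:
    """Fill 0-pixels with the median of valid neighbours; each pass grows fills inward."""
    out = [row[:] for row in depth]
    for _ in range(max_passes):
        prev = [row[:] for row in out]
        changed = False
        for y, row in enumerate(prev):
            for x, value in enumerate(row):
                if value == 0:
                    vals = _valid_neighbours(prev, y, x)
                    if vals:
                        out[y][x] = int(median(vals))
                        changed = True
        if not changed:
            break
    return out


def median_3x3(depth: Depth) -> Depth:
    """3x3 median over valid pixels to suppress speckle noise; holes stay 0."""
    out = [row[:] for row in depth]
    for y, row in enumerate(depth):
        for x, value in enumerate(row):
            if value != 0:
                out[y][x] = int(median(_valid_neighbours(depth, y, x)))
    return out
```

Pure Python is far too slow for full Kinect frames at 30 fps; the point here is only the order of operations (fill first, then filter), which a real implementation would express with optimized image-processing routines.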
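For actors beyond 4 meters, the text mentions adaptive background subtraction. One common form of this on depth data is a running-average background with selective update, sketched below. The class name, the learning rate `alpha`, the 150 mm threshold and the 4000 mm cut-off are all assumptions for illustration. A pixel counts as foreground when it is clearly in front of the learned background and at least 4 m away, since closer actors are left to the Kinect’s own tracker. Only non-foreground pixels update the model, so a standing actor is not absorbed into the background.

```python
from typing import List, Sequence

Frame = Sequence[Sequence[int]]  # depth in mm, 0 = no reading (assumption)


class DepthBackground:
    """Running-average depth background with selective update (hypothetical sketch)."""

    def __init__(self, first: Frame, alpha: float = 0.02,
                 threshold_mm: float = 150.0, min_mm: float = 4000.0) -> None:
        self.bg = [[float(d) for d in row] for row in first]
        self.alpha = alpha             # learning rate of the background model
        self.threshold = threshold_mm  # how far in front of the background counts as a person
        self.min_mm = min_mm           # closer pixels are left to the Kinect's own tracker

    def apply(self, frame: Frame) -> List[List[bool]]:
        """Return a foreground mask for `frame` and update the background model."""
        mask: List[List[bool]] = []
        for y, row in enumerate(frame):
            mrow: List[bool] = []
            for x, d in enumerate(row):
                b = self.bg[y][x]
                closer = d > 0 and b - d > self.threshold
                mrow.append(closer and d >= self.min_mm)
                if d > 0 and not closer:
                    # Invalid background pixels are initialised; others drift slowly.
                    self.bg[y][x] = float(d) if b == 0 else b + self.alpha * (d - b)
            mask.append(mrow)
        return mask
```

Selective update has a known weakness: scenery that moves closer to the Kinect is never learned. A production system would need some way to deal with that.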
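The stabilization of the jittery, squarish contour can be sketched as a small pipeline: Ramer-Douglas-Peucker simplification, resampling to a fixed number of points, a circular moving average, and an exponential moving average over time. The text only names simplification, smoothing and time averaging, so the choice of these specific techniques and all parameter values (`n`, `eps`, `k`, `alpha`) are assumptions. Resampling to a fixed point count is what makes per-point time averaging possible. The sketch naively assumes that point i in one frame corresponds to point i in the next.

```python
import math
from typing import List, Optional, Tuple

Pt = Tuple[float, float]


def _seg_dist(p: Pt, a: Pt, b: Pt) -> float:
    """Distance from p to segment ab (falls back to |pa| when a == b)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    l2 = dx * dx + dy * dy
    t = 0.0 if l2 == 0 else max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / l2))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def rdp(points: List[Pt], eps: float) -> List[Pt]:
    """Ramer-Douglas-Peucker simplification of an open polyline."""
    if len(points) < 3:
        return list(points)
    dists = [_seg_dist(p, points[0], points[-1]) for p in points[1:-1]]
    i = max(range(len(dists)), key=dists.__getitem__) + 1  # farthest interior point
    if dists[i - 1] <= eps:
        return [points[0], points[-1]]
    return rdp(points[: i + 1], eps)[:-1] + rdp(points[i:], eps)


def resample_closed(points: List[Pt], n: int) -> List[Pt]:
    """Resample a closed contour to n points evenly spaced along its perimeter."""
    loop = points + [points[0]]
    seg = [math.dist(loop[j], loop[j + 1]) for j in range(len(points))]
    total, j, acc = sum(seg), 0, 0.0  # acc = arc length at the start of segment j
    out: List[Pt] = []
    for k in range(n):
        target = total * k / n
        while acc + seg[j] < target:  # advance to the segment containing target
            acc += seg[j]
            j += 1
        t = 0.0 if seg[j] == 0 else (target - acc) / seg[j]
        (x0, y0), (x1, y1) = loop[j], loop[j + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def smooth_closed(points: List[Pt], k: int) -> List[Pt]:
    """Circular moving average over each point and its k neighbours on either side."""
    n, w = len(points), 2 * k + 1
    return [(sum(points[(i + o) % n][0] for o in range(-k, k + 1)) / w,
             sum(points[(i + o) % n][1] for o in range(-k, k + 1)) / w)
            for i in range(n)]


class ContourStabilizer:
    """Simplify, resample, smooth, then exponential time average (parameters assumed)."""

    def __init__(self, n: int = 64, eps: float = 2.0, k: int = 2, alpha: float = 0.3) -> None:
        self.n, self.eps, self.k, self.alpha = n, eps, k, alpha
        self.prev: Optional[List[Pt]] = None

    def update(self, contour: List[Pt]) -> List[Pt]:
        """Feed one frame's closed contour (projector pixels), get the stabilized outline."""
        simple = rdp(contour + [contour[0]], self.eps)[:-1]  # close the loop for RDP
        cur = smooth_closed(resample_closed(simple, self.n), self.k)
        if self.prev is not None:
            # Naive correspondence: point i now is assumed to match point i last frame.
            a = self.alpha
            cur = [(a * x + (1 - a) * px, a * y + (1 - a) * py)
                   for (x, y), (px, py) in zip(cur, self.prev)]
        self.prev = cur
        return cur
```

A lower `alpha` gives a calmer outline but makes it lag further behind fast movements. This is the usual trade-off of exponential smoothing.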
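The last step turns the processed contour into a grayscale mask and applies it to the video content. It can be sketched as follows. The aura is a hypothetical example effect: mask intensity falls off linearly with distance to the nearest contour point. The mask then scales each video pixel, so 0 gives black and 255 gives the full video. The function names and the linear falloff are assumptions. The distances are computed by brute force only for clarity; a distance transform would be the efficient choice.

```python
import math
from typing import List, Sequence, Tuple

Pt = Tuple[float, float]
Gray = List[List[int]]                    # mask values 0..255
Image = List[List[Tuple[int, int, int]]]  # RGB video frame, 0..255 per channel


def aura_mask(contour: Sequence[Pt], width: int, height: int, radius: float) -> Gray:
    """255 on the contour points, fading linearly to 0 at `radius` pixels away."""
    mask: Gray = []
    for y in range(height):
        row: List[int] = []
        for x in range(width):
            # Brute force: distance to the nearest contour point.
            d = min(math.hypot(x - cx, y - cy) for cx, cy in contour)
            row.append(round(255 * max(0.0, 1.0 - d / radius)))
        mask.append(row)
    return mask


def apply_mask(video: Image, mask: Gray) -> Image:
    """Scale every video pixel by mask/255: black where the mask is 0, untouched at 255."""
    return [[(r * m // 255, g * m // 255, b * m // 255) for (r, g, b), m in zip(vrow, mrow)]
            for vrow, mrow in zip(video, mask)]
```

Because the mask only decides where the video appears, the video content itself can be swapped without touching the tracking code.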

Some screenshots taken at the control desk, with additional information: