WOTH: Technical writeup

Goal of the project

We were asked to provide partly interactive visuals for the WOTH tour by Liesa van der Aa. The setup was quite big: one 7 m x 9 m semi-transparent sheet for back projection, and an identical one for floor projection. The top screen would show most of the information, while the floor projection acted as a mirror and as the starting point for the visuals.

The visuals of the show consisted of two parts. The first was a montage of pre-recorded footage of an actor (see here), while the second started from a real-time visualization of the mirrored contour of Liesa’s figure, which then transformed into elements for the show (sketches by Afreux Beeldfabriek).

This technical write-up focuses mainly on the second part: providing real-time visuals in a strict theater production environment.

The story told

Liesa is judged by 42 people after defending herself by performing a musical set. The judges are personified by a choir, and the master of ceremonies is called ‘Toth’, sometimes represented by a baboon or an ibis. The idea is to transform Liesa, during these judgement parts, into Toth’s various representations. Her own image goes on the floor projection, acting as a real-time mirror. From there, we gradually move to the back projection and to Toth’s representations. So the system needs to take live input and convert it smoothly into sketches of ibises.

Capturing Liesa’s contour & calibration

For capturing Liesa’s contour, we used a Kinect V2 device. The V2 is far superior to the first Kinect because it handles different lighting conditions easily, whereas the Kinect 1 gave really poor results under IR-emitting lights (such as typical PAR spots). Software-wise, however, the Kinect V2 is not as broadly supported out of the box by the creative coding community (it is a fairly new device, though support is growing rapidly). As a starting point, we used openFrameworks to write our own software, with some community addons: the Kinect V2 Common Bridge and ofxKinectV2 by Joshua Noble. After some tweaking, this was ready to go (see the link below for our version). We used the calibration system described here (published as an openFrameworks addon) to match the Kinect data to the physical projection location. However, the Kinect V2 confronted us with another problem: the maximum distance between the Kinect and the computer was 6 m due to the limitations of USB 3. There are some ways to increase the cable length, e.g. USB 3 fiber extension sets as mentioned in the Kinect forum post here, but they were impractical and costly. We therefore split the system: the visual engine and the Kinect input ran on two separate PCs connected over a network. The Kinect computer streamed the calibrated data to the main visual computer, where another openFrameworks program received it and generated the final visuals.
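With the system split in two, the Kinect PC has two jobs per frame: map Kinect camera-space points to projector pixels using the calibration result, and pack them into a network message for the visual PC. The sketch below illustrates both steps in plain C++ under stated assumptions: it assumes the calibration yields a 3x4 projection matrix (a common pinhole-style formulation, not necessarily what the calibration addon computes), and the packet layout (a point count followed by x/y float pairs) is hypothetical, since the actual wire format is not described here. The socket send itself is left out.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Assumed calibration result: a 3x4 matrix mapping a Kinect camera-space
// point to homogeneous projector pixel coordinates.
using Mat34 = std::array<std::array<float, 4>, 3>;

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// Project one camera-space point into projector pixels (perspective divide).
Vec2 projectToProjector(const Mat34& m, const Vec3& p) {
    float h[3];
    for (int r = 0; r < 3; ++r) {
        h[r] = m[r][0] * p.x + m[r][1] * p.y + m[r][2] * p.z + m[r][3];
    }
    assert(h[2] != 0.0f && "point projects to infinity");
    return {h[0] / h[2], h[1] / h[2]};
}

// Hypothetical wire format: uint32 point count, then x,y float pairs.
// Assumes both PCs share endianness and float layout.
std::vector<std::uint8_t> packContour(const std::vector<Vec2>& pts) {
    const std::uint32_t n = static_cast<std::uint32_t>(pts.size());
    std::vector<std::uint8_t> buf(sizeof(n) + n * 2 * sizeof(float));
    std::memcpy(buf.data(), &n, sizeof(n));
    std::size_t off = sizeof(n);
    for (const Vec2& p : pts) {
        std::memcpy(buf.data() + off, &p.x, sizeof(float)); off += sizeof(float);
        std::memcpy(buf.data() + off, &p.y, sizeof(float)); off += sizeof(float);
    }
    return buf;
}

// Inverse of packContour, as the visual PC would run it on receipt.
std::vector<Vec2> unpackContour(const std::vector<std::uint8_t>& buf) {
    std::uint32_t n = 0;
    assert(buf.size() >= sizeof(n));
    std::memcpy(&n, buf.data(), sizeof(n));
    assert(buf.size() == sizeof(n) + n * 2 * sizeof(float));
    std::vector<Vec2> pts(n);
    std::size_t off = sizeof(n);
    for (Vec2& p : pts) {
        std::memcpy(&p.x, buf.data() + off, sizeof(float)); off += sizeof(float);
        std::memcpy(&p.y, buf.data() + off, sizeof(float)); off += sizeof(float);
    }
    return pts;
}
```

Sending already-calibrated 2D points keeps the visual PC independent of the Kinect and its calibration; it only ever sees projector coordinates.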

openFrameworks visuals: contour morphing, mycelium & insects

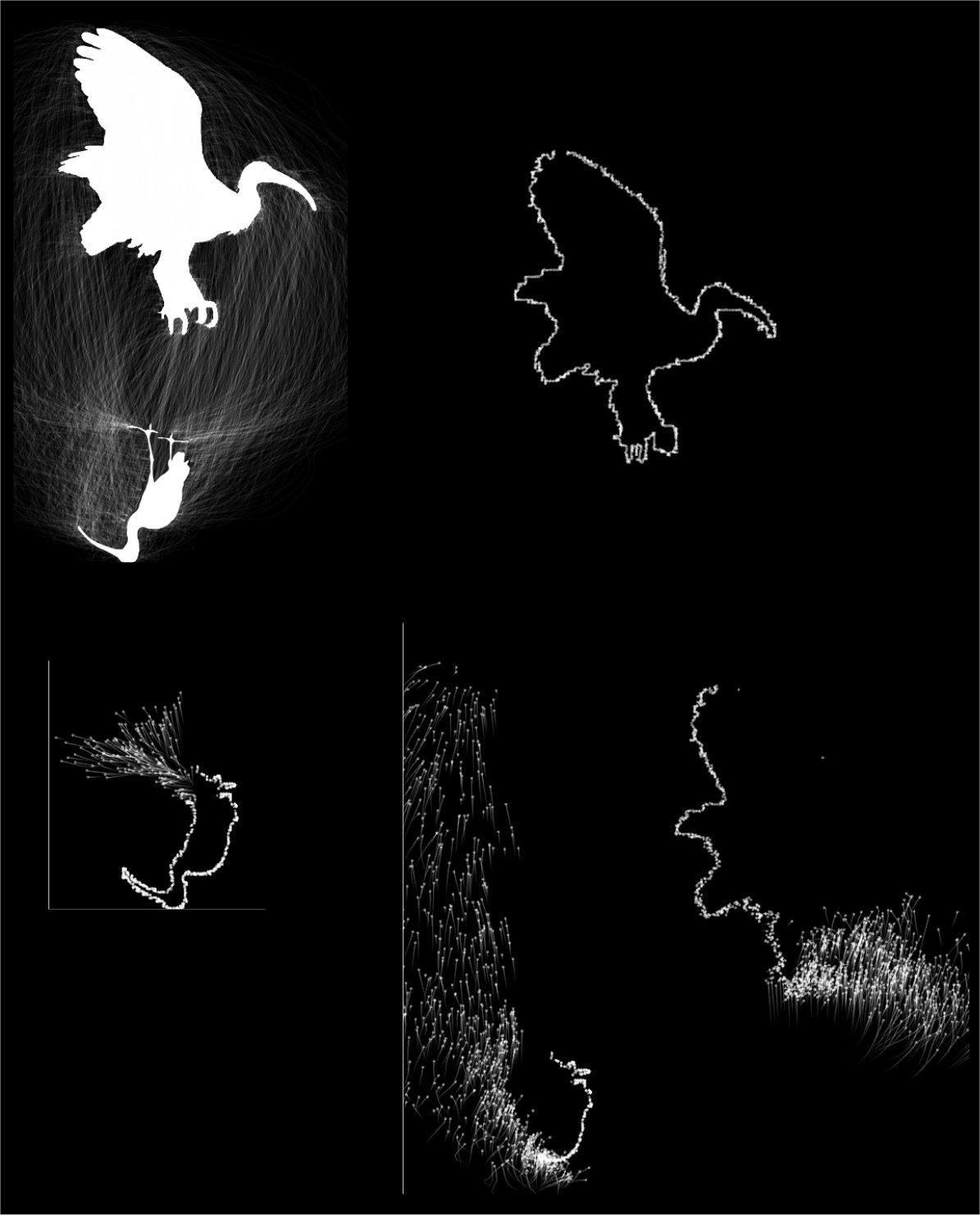

The visuals lean on the idea of transforming one abstract form (live contour, pre-made ibis sketches, sprite sheets, image/video, …) into another without completely showing the beginning or the end, and keeping the middle of the transition mysterious (it shouldn’t be clear what you are transforming into until it’s slam-bang in your face). Image 1 above is an example of such a sketch.

Our first attempt was contour morphing: various ways of transforming one contour into another. While hypnotic under the right conditions, it was not the end goal, because some content also had to be visible inside the contours to tell the story.

Contour morphing experiment from Hangaar.net on Vimeo.
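One straightforward way to morph between two contours, offered as an illustration rather than the exact method used in these experiments, is to resample both closed outlines to the same number of points, evenly spaced along their perimeter, and then linearly interpolate corresponding points. The function names below are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };

// Resample a closed polyline to n points evenly spaced along its perimeter.
std::vector<Pt> resampleClosed(const std::vector<Pt>& poly, std::size_t n) {
    assert(poly.size() >= 2 && n > 0);
    const std::size_t m = poly.size();
    // Cumulative arc length at each vertex, including the closing edge.
    std::vector<float> cum(m + 1, 0.0f);
    for (std::size_t i = 0; i < m; ++i) {
        const Pt& a = poly[i];
        const Pt& b = poly[(i + 1) % m];
        cum[i + 1] = cum[i] + std::hypot(b.x - a.x, b.y - a.y);
    }
    const float total = cum[m];
    std::vector<Pt> out;
    out.reserve(n);
    std::size_t seg = 0;
    for (std::size_t k = 0; k < n; ++k) {
        const float target = total * static_cast<float>(k) / static_cast<float>(n);
        // Advance to the segment that contains this arc-length position.
        while (seg + 1 < m && cum[seg + 1] < target) ++seg;
        const Pt& a = poly[seg];
        const Pt& b = poly[(seg + 1) % m];
        const float len = cum[seg + 1] - cum[seg];
        const float t = len > 0.0f ? (target - cum[seg]) / len : 0.0f;
        out.push_back({a.x + t * (b.x - a.x), a.y + t * (b.y - a.y)});
    }
    return out;
}

// Interpolate between two contours: t = 0 gives a, t = 1 gives b.
std::vector<Pt> morph(const std::vector<Pt>& a, const std::vector<Pt>& b,
                      float t, std::size_t n) {
    const std::vector<Pt> ra = resampleClosed(a, n);
    const std::vector<Pt> rb = resampleClosed(b, n);
    std::vector<Pt> out(n);
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = {ra[i].x + t * (rb[i].x - ra[i].x),
                  ra[i].y + t * (rb[i].y - ra[i].y)};
    }
    return out;
}
```

The choice of starting vertex on each contour matters: if the two start points sit on opposite sides of the figure, the morph visibly twists, so a fuller implementation would align them first.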

By adding some randomness to the paths and keeping them visible, we got a web-like structure. It was also pleasing, but it didn’t solve our problem. This is what it looked like when transforming between two bird sketches:

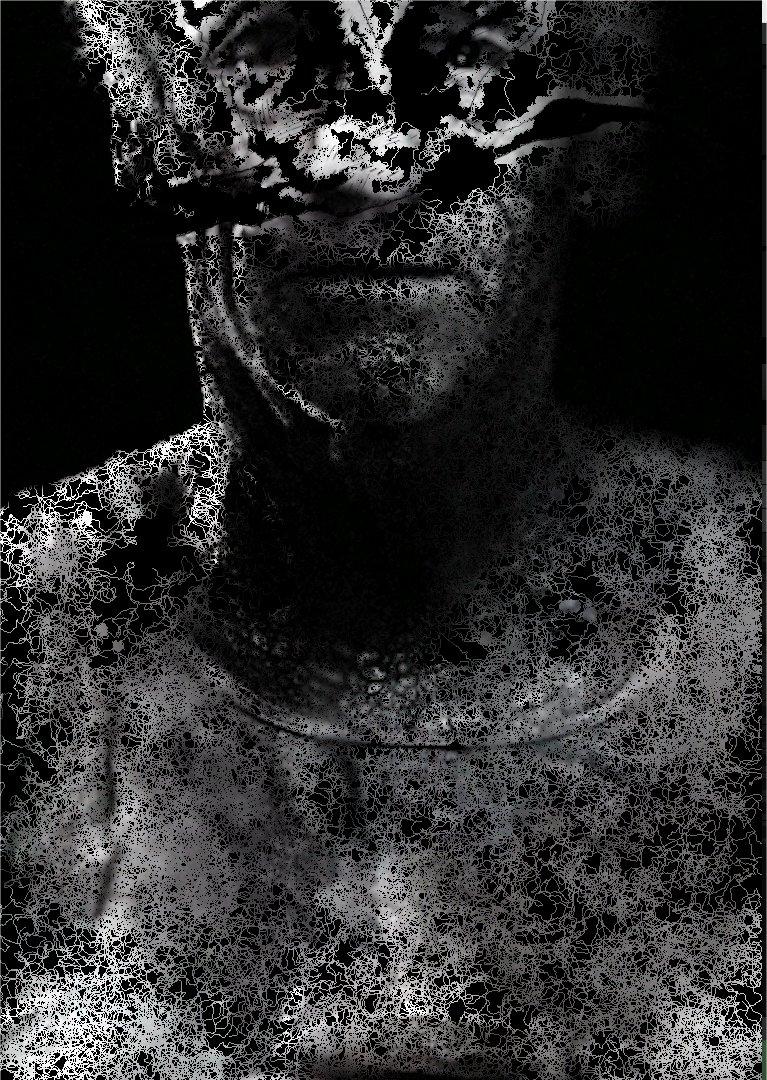
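A possible way to get such a web-like look, sketched here as an assumption rather than the exact method used, is to let each point travel from its source position to its target position along a path displaced by a random walk perpendicular to the straight line, with the displacement faded out at both ends so the path still starts and ends exactly on the two contours. Drawing the whole path instead of only the current position leaves the visible strands. `noisyPath` and its parameters are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct P2 { float x, y; };

// Wobbly path from a to b with steps + 1 samples. The perpendicular offset is a
// random walk scaled by the envelope 4t(1 - t), which is zero at t = 0 and t = 1.
std::vector<P2> noisyPath(P2 a, P2 b, int steps, float stepJitter, std::mt19937& rng) {
    assert(steps > 0 && stepJitter >= 0.0f);
    std::uniform_real_distribution<float> jitter(-stepJitter, stepJitter);
    const float dx = b.x - a.x, dy = b.y - a.y;
    const float len = std::hypot(dx, dy);
    // Unit normal to the straight segment (zero if a == b).
    const float nx = len > 0.0f ? -dy / len : 0.0f;
    const float ny = len > 0.0f ? dx / len : 0.0f;
    std::vector<P2> path;
    path.reserve(static_cast<std::size_t>(steps) + 1);
    float offset = 0.0f;
    for (int i = 0; i <= steps; ++i) {
        const float t = static_cast<float>(i) / static_cast<float>(steps);
        offset += jitter(rng);  // random walk keeps neighbouring samples correlated
        const float env = 4.0f * t * (1.0f - t);
        path.push_back({a.x + t * dx + nx * offset * env,
                        a.y + t * dy + ny * offset * env});
    }
    return path;
}
```

Rendering one such path per resampled contour point, with low opacity, gives strands that converge on both shapes while wandering in between.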

Back in 2010, this great article was published about a Processing sketch by Ryan Alexander emulating mycelium growth. Mycelium is a simulation of fungal hyphae growth that uses images as food. Our visual style for the real-time part was hugely inspired by this post. Using an openFrameworks port of the Processing sketch, found in the comments of that post, we made this test:

Mycelium Experiment from Hangaar.net on Vimeo.

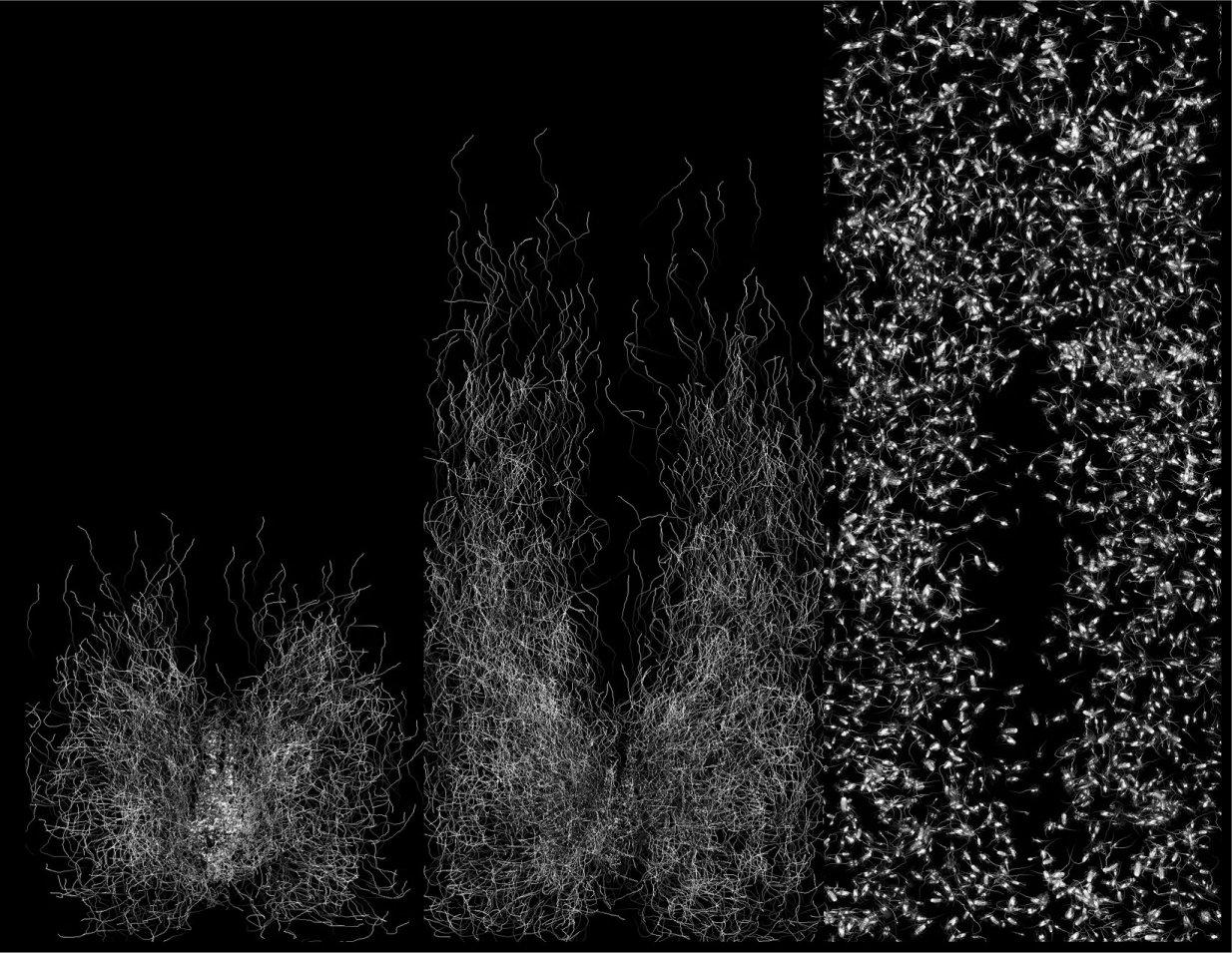

While the mycelium style and concept worked very well on still images, it did not work well on live input, as it took a long time to ‘grow’ into each new input. So we started again from scratch, keeping the basic concept of mycelium: moving particles that eat away at the input source. To make it work in real time, we limited the trail length, and with each new frame new ‘food’ was added for the particles. In the following video, a new frame is added roughly every 5 seconds:

Mycellium Buildup from Hangaar.net on Vimeo.

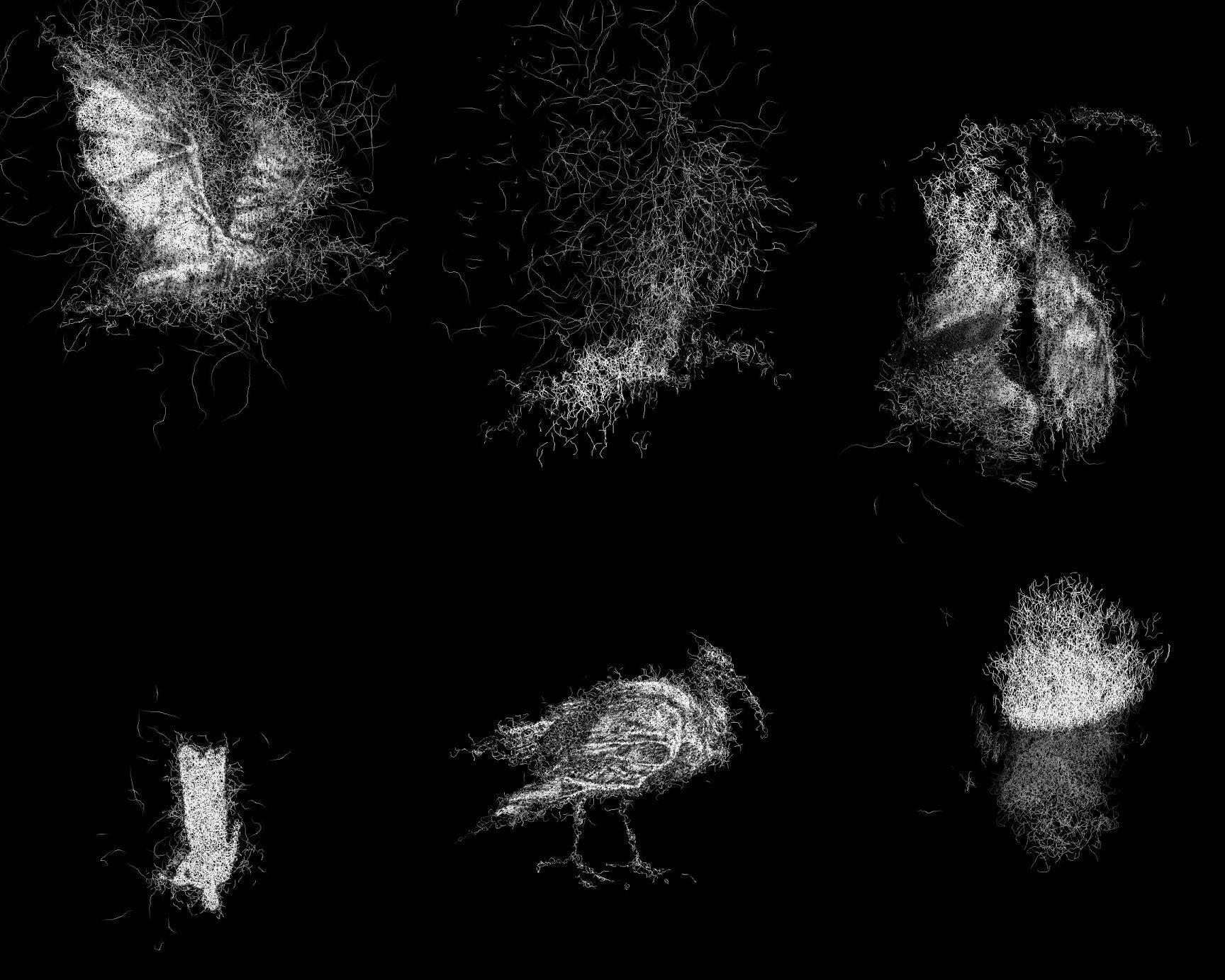
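The real-time variant described above (particles eating the input, a limited trail length, new food with each frame) can be sketched as a grid of food values that particles consume while steering toward the richest neighbouring cell. This is a simplified reconstruction under our own assumptions (grayscale food grid, greedy 8-neighbour steering, a fixed energy cost per step), not the original code; `FoodGrid`, `Particle`, `step` and the constants are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical food map: one value per pixel, e.g. input brightness in [0, 1].
struct FoodGrid {
    int w, h;
    std::vector<float> food;
    FoodGrid(int w_, int h_) : w(w_), h(h_), food(static_cast<std::size_t>(w_) * h_, 0.0f) {}
    float& at(int x, int y) { return food[static_cast<std::size_t>(y) * w + x]; }
    bool inside(int x, int y) const { return x >= 0 && y >= 0 && x < w && y < h; }
    // A new input frame adds fresh food on top of what is left (clamped to 1).
    void addFrame(const std::vector<float>& frame) {
        assert(frame.size() == food.size());
        for (std::size_t i = 0; i < food.size(); ++i) food[i] = std::min(1.0f, food[i] + frame[i]);
    }
};

constexpr std::size_t kMaxTrail = 32;  // hypothetical trail length limit
constexpr float kStepCost = 0.05f;     // hypothetical energy cost per step
constexpr float kBite = 0.5f;          // hypothetical amount eaten per step

struct Particle {
    int x, y;
    float energy = 1.0f;
    std::deque<std::pair<int, int>> trail;  // only the most recent positions
    bool alive() const { return energy > 0.0f; }
};

// Move one step toward the neighbouring cell with the most food and eat from it.
void step(Particle& p, FoodGrid& g) {
    if (!p.alive()) return;
    int bestX = p.x, bestY = p.y;
    float best = -1.0f;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            if (dx == 0 && dy == 0) continue;
            const int nx = p.x + dx, ny = p.y + dy;
            if (g.inside(nx, ny) && g.at(nx, ny) > best) { best = g.at(nx, ny); bestX = nx; bestY = ny; }
        }
    }
    p.x = bestX;
    p.y = bestY;
    float& cell = g.at(p.x, p.y);
    const float eaten = std::min(cell, kBite);
    cell -= eaten;                    // the particle eats away the source image
    p.energy += eaten - kStepCost;    // particles that find no food slowly die
    p.trail.emplace_back(p.x, p.y);
    if (p.trail.size() > kMaxTrail) p.trail.pop_front();  // cap the trail length
}
```

Capping the trail is what keeps the image responsive: old strokes disappear on their own, so each new frame of food can take over instead of piling up on top of everything drawn before.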

With that sorted out, we added the live input as another source. The human contours were filled with gradients and added to the mycelium sketch once every second (often enough to feel responsive, although the mycelium needed some time to grow into the new figure each time). Hence the contours were never simply contours: particles were ‘alive’ inside them, producing a less obvious but more lively contour image. As a bonus, Kinect noise and detection errors blended into the overall image. After a while, the input image (the real-time contour) was blended with Afreux Beeldfabriek’s sketches of the ibis, so the particles started eating away at the sketch. New frames and sources were then added while the contour faded out, but the mycelium kept eating and forming images. In this way, we moved smoothly from live input to mycelium versions of the sketches. Parameters were modulated live to match the scene in the theater.
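The transition from live contour to ibis sketch comes down to changing, over time, which image is fed to the particles as food. A minimal sketch of that schedule, assuming both sources are grayscale buffers of the same size and that a single crossfade weight moves from contour to sketch; `blendSources` and `FeedTimer` are hypothetical names, and only the once-per-second feed rate comes from the text above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Mix the live contour image and the ibis sketch into one food frame.
// mix = 0 gives only the contour, mix = 1 gives only the sketch.
std::vector<float> blendSources(const std::vector<float>& contour,
                                const std::vector<float>& sketch, float mix) {
    assert(contour.size() == sketch.size());
    if (mix < 0.0f) mix = 0.0f;
    if (mix > 1.0f) mix = 1.0f;
    std::vector<float> out(contour.size());
    for (std::size_t i = 0; i < out.size(); ++i) {
        out[i] = (1.0f - mix) * contour[i] + mix * sketch[i];
    }
    return out;
}

// Decides when to push a new food frame: once per `interval` seconds,
// independent of the render frame rate.
struct FeedTimer {
    double interval = 1.0;  // seconds between food frames
    double last = -1e9;     // time of the previous feed
    bool shouldFeed(double now) {
        if (now - last >= interval) {
            last = now;
            return true;
        }
        return false;
    }
};
```

Driving `mix` from a live control (rather than a fixed ramp) would fit the text’s remark that parameters were modulated live to match the scene.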

For added excitement, we implemented the concept of ‘wanderers’. In the mycelium, particles that didn’t get a lot to eat died off; in our case, however, some got a second chance by wandering randomly through the image, leaving long trails behind (see above). The number of wanderers was modulated by the music’s climaxes: more randomness and vividness in the image when the music reached high amplitude.
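The wanderer mechanism and its link to the music can be sketched as follows, assuming the audio arrives as blocks of float samples and that loudness is measured as RMS. The mapping from RMS to a rebirth probability (`wandererChance`, `maxChance`) and the `Walker` type are hypothetical choices, not the show’s actual tuning: a starving particle either dies or, with that probability, becomes a wanderer that takes random steps.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

// Root-mean-square amplitude of one audio block (samples in [-1, 1]).
float rms(const std::vector<float>& block) {
    if (block.empty()) return 0.0f;
    double sum = 0.0;
    for (float s : block) sum += static_cast<double>(s) * s;
    return static_cast<float>(std::sqrt(sum / block.size()));
}

// Louder music gives a higher chance that a starving particle becomes a wanderer.
float wandererChance(float amplitude, float maxChance = 0.5f) {
    const float a = std::fmin(std::fmax(amplitude, 0.0f), 1.0f);
    return a * maxChance;
}

struct Walker {
    float x, y;
    bool wandering = false;
    bool dead = false;
};

// Called when a particle runs out of food.
void onStarved(Walker& w, float amplitude, std::mt19937& rng) {
    assert(!w.dead);
    std::bernoulli_distribution reborn(wandererChance(amplitude));
    if (reborn(rng)) w.wandering = true; else w.dead = true;
}

// One random step of fixed length in a random direction; the caller keeps
// drawing the wanderer's trail.
void wander(Walker& w, float stepSize, std::mt19937& rng) {
    std::uniform_real_distribution<float> angle(0.0f, 6.2831853f);
    const float a = angle(rng);
    w.x += stepSize * std::cos(a);
    w.y += stepSize * std::sin(a);
}
```

Tying the probability to RMS means quiet passages let starving particles simply disappear, while loud climaxes release many long random trails at once.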

Finally, we made a last version that produced a lot of wanderers to fill the image with particles, all originating from Liesa’s real-time contour. When the screen was full, the contour disappeared and the wanderers changed into walking insects.

Getting the visuals from openFrameworks to Resolume: Spout!

But the show was more than just these openFrameworks sketches: there was pre-recorded video, projection mapping onto the stage, synchronized music output, a cue system, etc. Instead of writing our own video playback and mapping system, we used Resolume Arena, which supports all the needed features out of the box.

Getting our own openFrameworks visuals into Resolume was another matter. On Mac OS X, Syphon is the well-known way to pass images from one program to another while keeping them in GPU memory, which is very efficient. However, Syphon does not support Windows, which runs on our projection computer. Resolume also supports FFGL plugins (based on OpenGL), but converting our openFrameworks sketches into native FFGL plugins would have been very impractical. Some wrappers exist for building openFrameworks sketches as FFGL plugins, but they are not quite there yet.

We were in luck: about a year ago, progress was made towards a Syphon alternative for Windows called Spout. It shares textures on the GPU between different OpenGL and DirectX 9/11 programs, and its developers made an FFGL receiver that can be used in Resolume!

Recently, Spout2 was released, a huge improvement over the first version in terms of compatibility (support for more texture types, a nice SDK, openFrameworks addons, etc.). We used the SDK from their GitHub in our openFrameworks sketches to get our final output into Resolume, with success! You can find our ofxSpout2 addon on GitHub; it simplifies sending textures from openFrameworks to just one line of code, spout.sendTexture(string senderName, ofTextureReference& texture);, and supports multiple senders.

We did encounter some stability issues when opening and closing a lot of receivers and senders, but after using it for a while we realized that changing texture resolutions and types in openFrameworks without creating a new Spout sender was a bad idea. Another issue was a huge memory leak: almost 1 MB of RAM was allocated and never freed for each frame sent! The issue was posted on the Spout2 GitHub; the leak turned out to be caused by a bug in the Nvidia drivers and was fixed by updating to the newest drivers (always risky on a production computer used for multiple shows, but we did it anyway and it worked!).
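The lesson about texture changes can be captured in a small bookkeeping layer: remember the size and format each named sender was created with, and tear it down and recreate it whenever an incoming texture differs. The sketch below uses a stand-in `FakeSender` instead of the real Spout2 SDK classes, whose API is not reproduced here; `SenderPool`, `TextureDesc` and the integer format code are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical description of the texture being shared.
struct TextureDesc {
    int width = 0, height = 0;
    int format = 0;  // placeholder for e.g. an OpenGL internal format enum
    bool operator==(const TextureDesc& o) const {
        return width == o.width && height == o.height && format == o.format;
    }
};

// Stand-in for a real Spout sender; counts creations for illustration.
struct FakeSender {
    std::string name;
    TextureDesc desc;
    static int created;
    FakeSender(std::string n, TextureDesc d) : name(std::move(n)), desc(d) { ++created; }
};
int FakeSender::created = 0;

// One sender per name, recreated whenever the texture's size or format changes
// instead of feeding a different kind of texture to an existing sender.
class SenderPool {
public:
    FakeSender& get(const std::string& name, const TextureDesc& desc) {
        auto it = senders_.find(name);
        if (it != senders_.end() && it->second->desc == desc) {
            return *it->second;  // same size and format: reuse
        }
        // New name, or the texture changed: drop any old sender, then create one.
        senders_.erase(name);
        std::unique_ptr<FakeSender>& slot = senders_[name];
        slot = std::make_unique<FakeSender>(name, desc);
        return *slot;
    }
    std::size_t size() const { return senders_.size(); }

private:
    std::map<std::string, std::unique_ptr<FakeSender>> senders_;
};
```

Keying senders by name matches the multiple-sender use described above, and destroying the old sender before creating its replacement avoids two senders briefly sharing one name.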

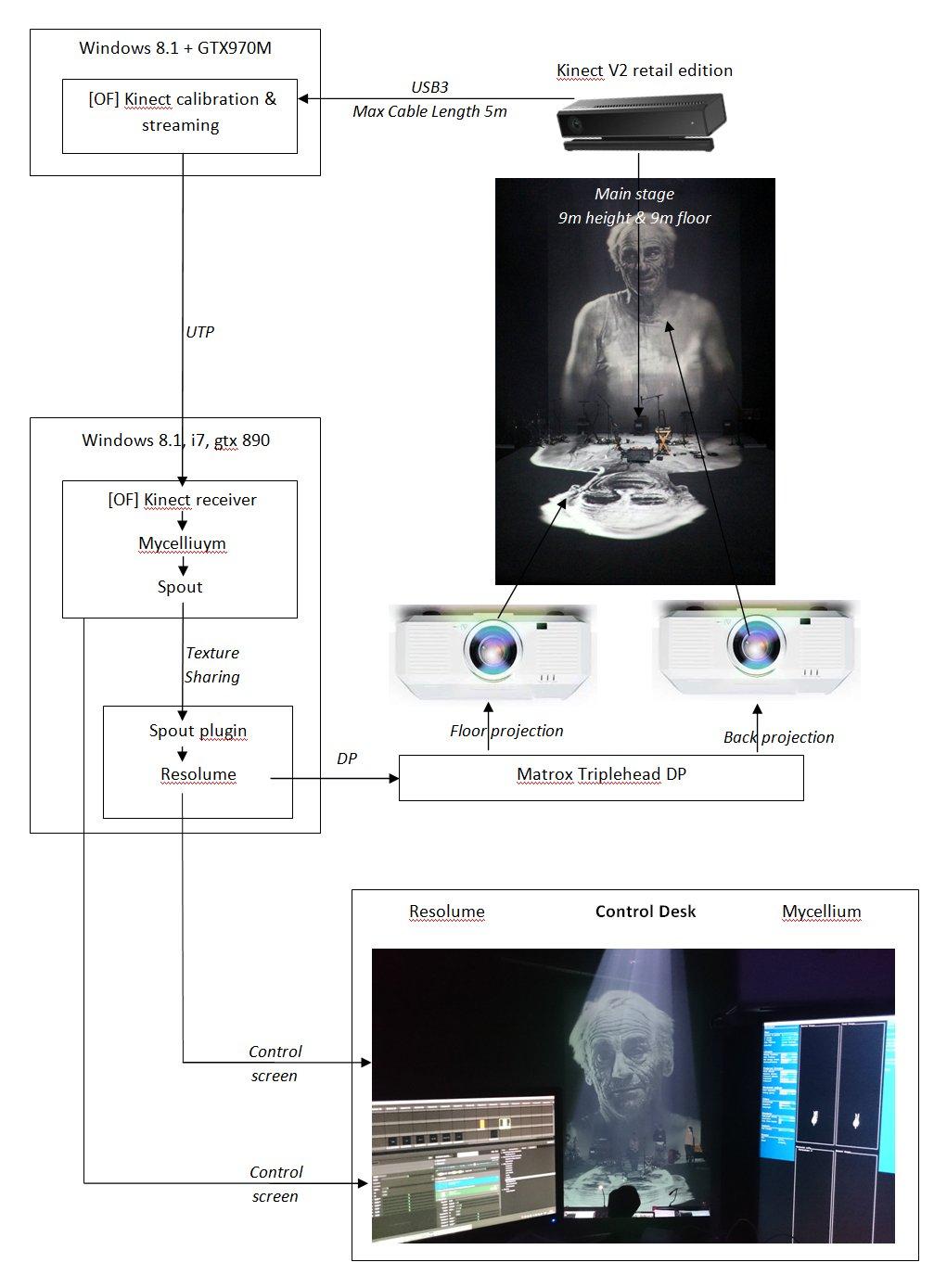

Schematic

This image shows the final system and all components.

For now, a short clip showing some of the visuals during development can be found here: http://cobra.be/cm/cobra/videozone/rubriek/muziek-videozone/1.21684386

GitHub repos for the tools we created:

- Edited Kinect V2 backend: http://github.com/Kj1/ofxKinectV2

- KinectV2 Calibration: http://github.com/Kj1/ofxKinect2ProjectorCalibration

- Spout addon: http://github.com/Kj1/ofxSpout2